
The ethics of self-driving car collisions: whose life is more important?

In an unavoidable collision involving a robotic driverless car, who should die? That’s the ethical question being pondered by automobile companies as they develop the new generation of cars.

Stanford University researchers are helping the industry to devise a new ethical code for life-and-death scenarios.

According to Autonews, Dieter Zetsche, the CEO of Daimler AG, asked at a conference: “[What] if an accident is really unavoidable, when the only choice is a collision with a small car or a large truck, driving into a ditch or into a wall, or to risk sideswiping the mother with a stroller or the 80-year-old grandparent? These open questions are industry issues, and we have to solve them in a joint effort.”

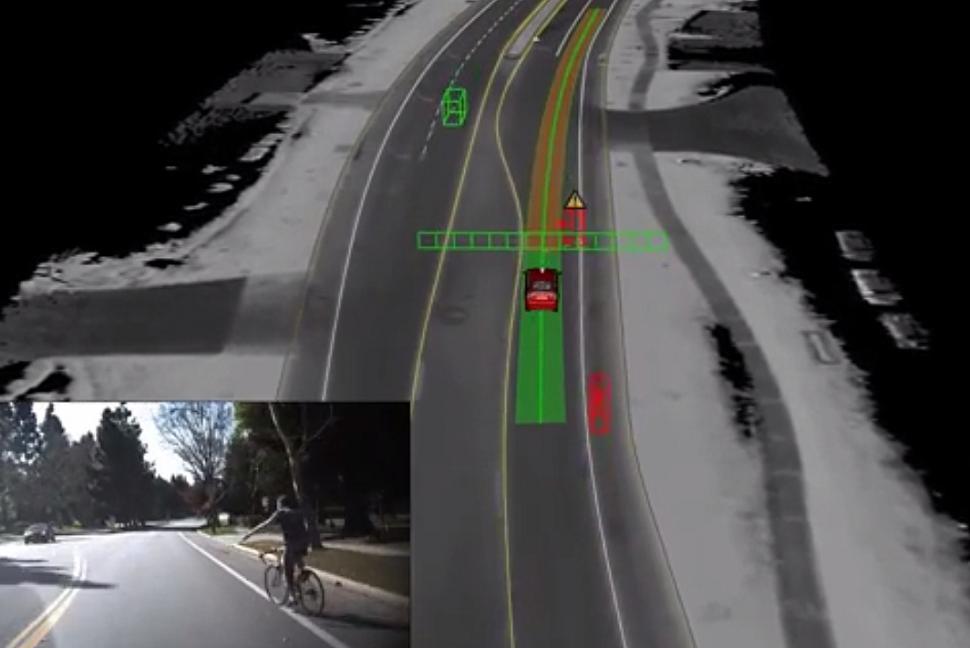

Google’s own self-driving car gives cyclists extra space when it spots them in the lane. In theory that puts the car’s occupants at greater risk of a collision, but the car does it anyway. This is an ethical choice.

“Whenever you get on the road, you’re making a trade-off between mobility and safety,” says Noah Goodall, a researcher at the University of Virginia.

“Driving always involves risk for various parties. And anytime you distribute risk among those parties, there’s an ethical decision there.”

Google’s system is constantly making decisions that weigh information against safety risks, asking the following three questions in a continuous loop:

1. How much information would be gained by making this maneuver?

2. What’s the probability that something bad will happen?

3. How bad would that something be? In other words, what’s the “risk magnitude”?
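The three questions above can be sketched as a simple scoring loop. This is a minimal illustration, not Google’s implementation: it assumes each candidate maneuver is scored as the information it gains minus a weighted expected risk, where expected risk is the sum of probability × risk magnitude over every bad outcome. The class and function names (`Maneuver`, `choose_maneuver`), the trade-off weight `RISK_WEIGHT` and all the probabilities and information values are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical trade-off weight: how much one unit of expected risk
# counts against one unit of information gained. Not a figure from Google.
RISK_WEIGHT = 0.01


@dataclass
class Maneuver:
    name: str
    info_gain: float  # Question 1: information gained by this maneuver
    # Questions 2 and 3: (probability, risk magnitude) for each bad outcome
    outcomes: list[tuple[float, float]] = field(default_factory=list)

    def expected_risk(self) -> float:
        # Each bad outcome contributes probability x magnitude.
        return sum(p * m for p, m in self.outcomes)

    def score(self) -> float:
        # Value of the information minus the weighted expected risk.
        return self.info_gain - RISK_WEIGHT * self.expected_risk()


def choose_maneuver(candidates: list[Maneuver]) -> Maneuver:
    # One pass of the loop: ask all three questions of every candidate
    # and keep the best-scoring one. A real planner would repeat this
    # continuously as new sensor data arrives.
    return max(candidates, key=lambda m: m.score())


# Hypothetical scenario: a truck is blocking the car's view.
stay = Maneuver("stay put", info_gain=0.0, outcomes=[])
edge = Maneuver("edge left for a better view", info_gain=1.0,
                outcomes=[(0.01, 5_000)])             # hit by the truck
blind = Maneuver("pull out blind", info_gain=2.0,
                 outcomes=[(0.01, 20_000),            # head-on crash
                       (0.005, 100_000)])             # hitting a pedestrian

best = choose_maneuver([stay, edge, blind])
print(best.name)
```

With these made-up numbers, pulling out blind gains the most information but carries far too much expected risk, so the small, cautious maneuver wins. Changing `RISK_WEIGHT` or the magnitudes changes the choice, which is exactly where the ethical judgement sits.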

In an example published in Google’s patent, says Autonews, “getting hit by the truck that’s blocking the self-driving car’s view has a risk magnitude of 5,000. Getting into a head-on crash with another car would be four times worse: the risk magnitude is 20,000. And hitting a pedestrian is deemed [20] times worse, with a risk magnitude of 100,000.

“Google was merely using these numbers for the purpose of demonstrating how its algorithm works. However, it’s easy to imagine a hierarchy in which pedestrians, cyclists, animals, cars and inanimate objects are explicitly protected differently.”
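The ratios implied by the patent’s example figures can be checked directly. The three magnitudes below are the ones quoted above; the probabilities in the second half are hypothetical, added only to show that a magnitude becomes a decision once it is multiplied by how likely the outcome is. The name `expected_risk` is illustrative, not part of any published API.

```python
# Risk magnitudes from the example in Google's patent, as quoted by Autonews.
RISK_MAGNITUDE = {
    "hit by view-blocking truck": 5_000,
    "head-on crash with another car": 20_000,
    "hitting a pedestrian": 100_000,
}

baseline = RISK_MAGNITUDE["hit by view-blocking truck"]

# How many times worse each outcome is than being hit by the truck.
ratios = {outcome: magnitude / baseline
          for outcome, magnitude in RISK_MAGNITUDE.items()}

for outcome, ratio in ratios.items():
    print(f"{outcome}: {ratio:g}x the truck collision")


def expected_risk(probability: float, outcome: str) -> float:
    # Expected risk = probability of the outcome x its risk magnitude.
    return probability * RISK_MAGNITUDE[outcome]


# Hypothetical probabilities: a 1-in-1,000 chance of hitting a pedestrian
# still outweighs a 1-in-100 chance of being hit by the truck.
pedestrian = expected_risk(0.001, "hitting a pedestrian")
truck = expected_risk(0.01, "hit by view-blocking truck")
print(f"pedestrian: {pedestrian:g}, truck: {truck:g}")
```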

We recently reported how Google has released a new video showing how its self-driving car is being taught to cope with common road situations such as encounters with cyclists. We’d far rather share the road with a machine that’s this courteous and patient than with a lot of human drivers.

We’ve all been there. You need to turn across the traffic, but you’re not quite sure where, so you’re a bit hesitant, perhaps signalling too early and then changing your mind before finally finding the right spot.

Do this in a car and other drivers just tut a little. Do it on a bike and some bozo will be on the horn instantly and shouting at you when he gets past because you’ve delayed him by three-tenths of a nanosecond.

But not if the car’s being controlled by Google’s self-driving system. As you can see in this video, the computer that steers Google’s car can recognise a cyclist and knows to hold back when it sees a hand signal, and even to wait if the rider behaves hesitantly.


69 comments

And read the bit after the slash: "can't afford". I know this might come as a shock to some, but there are people who don't have the money, who are not old enough, or who don't have driving licences. There are people for whom not having a car is not a lifestyle choice but a circumstance imposed upon them.

Or do you believe that bikes are toys and can never be anything else?

When my bike was a small (micro?) goods vehicle it was virtually indispensable. When I was a kid, likewise. The two circumstances are connected by their use as a conduit for some other function. But anything which is used for the sake of using it, whether it's a bike, a motorbike, a boat or an XBOX, is a toy. A bicycle has the saving grace of improving health, of course, but you could do that by jogging.

By your definition a car is then also a "toy".

I mostly use my bike for short urban journeys because I hate driving... "toy" or "not toy"?

No, I want it not to be seen as a hobby for the moneyed middle classes. I want it to be recognised that it's a more space-efficient, healthier, and less dangerous way to get around. Ultimately I'd like all those I know who mostly use public transport to feel safe using a bike instead.

So your point is moot, rather.

You mean it might wait until it's safe to overtake and give plenty of room?

Yeah, wouldn't that just be bloody awful.

It's interesting that Google is formalising decisions which are already being made, but informally and without really being acknowledged. I suspect the resulting choices are made differently. I am coming to welcome these self-driving cars.

Indeed. And yet there are those 'instinctive' decisions that are invariably wrong. Slamming on the brakes when a cat/dog/fox/squirrel runs out, causing multiple collisions behind (P.S. don't bother explaining about driving with adequate room to stop, etc, everyone does it).

Or being overtaken approaching a junction and something emerging from the right and the overtaking car swerving in (P.S. don't bother explaining about how you still have to give way to the left when emerging left from a T junction, everyone does it. And yes, don't overtake through a junction, but again, so many people do it).

I'm sure there are numerous other examples where the instinctive human response to a sudden, unforeseeable event is different from what a computer would decide.

On your point that everyone does it: what happens when a computer that follows the rules is driving, rather than a person who ignores them?

Clearly, the computerised vehicle would check that the space in front of it was clear when pulling out of a side road.
